Anyone who has done any appreciable work in software engineering is familiar with one of the most challenging aspects of software development: the realization that software engineering isn’t fundamentally about building so much as it is about discovering. For long stretches of our careers we are taught (either implicitly, through the language we use to describe our work, or explicitly, through the analogies offered to explain it) that the right metaphor for our work is the construction of buildings. But anyone who has been in this business for any significant time appreciates that software engineering is really more an act of discovery than an act of construction.

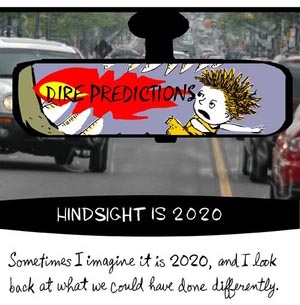

20-20 Hindsight

At one time or another we’ve likely all found ourselves saying…

If only I knew then what I know now, I would have done thus-and-such differently on this project!

Software development processes, techniques, and values that come from schools of thought like Agile, Lean, Scrum, XP, and many others all do their best to minimize this effect by encouraging us to write loosely-coupled, well-isolated, cohesive code and to ensure we have adequate feedback mechanisms in place, such as unit tests, continuous integration, on-site customers, and more.

But in the end you have to commit to one decision or another, and nobody (I don’t care how good or experienced you are) will make every decision perfectly when it is reviewed in hindsight. The very fact that you need to look back at past decisions and review them with the caveat “I made the best choices I could given the information that I had at the time” implies that better information surfaced later, information that might have changed your decision had you had access to it sooner.

Build One to Throw Away

This realization that more and better information always surfaces later in a project has led to the popular (well, maybe ‘popular’ isn’t the right word 🙂 ) concept that the best way to build good software is to first ‘Build One to Throw Away’. While the people holding the purse-strings for projects might bristle at the suggestion that the business should pay for TWO solutions when just one would do, the truth is that many other professions do exactly that all the time; they just call it by a more attractive and acceptable term: prototyping.

Popular in much of Industrial Design (as well as other fields), the notion of prototyping isn’t only an acceptable norm but is very nearly always required by the product design process. Consider as an example the design of a piece of furniture, let’s say an office chair. Without building (and testing) one or more prototypes, you cannot really know if the chair will be comfortable for its users. Just as with software, you can rely on rules-of-thumb, bodies-of-knowledge, and other historical precedents (including your own past experiences), but in the end the only way to really know if the chair will work is to build and test a prototype. Otherwise, the furniture designer is just guessing whether the chair will actually work as a product.

Consider also that building the prototype may uncover all kinds of other potential issues that have less to do with the needs of the ‘user’ of the chair and more to do with the economics of fabricating the chair in a factory. For example, building the prototype may reveal that the factory cannot efficiently assemble the chair on the assembly line unless accommodation is made for larger screws and bolts…but those larger screws and bolts might conflict with the positioning of the chair’s seat such that its ergonomics are negatively affected. The analogue in software engineering is the ‘works-great-on-my-machine’ scenario, where the production system, once loaded with terabytes of actual data and working with a database server that is now located across a higher-latency network, ‘surprisingly’ ceases to perform acceptably. You simply cannot know about these challenges without first building a prototype as an exercise in learning both what to do and what not to do, whether it’s an office chair or a software system.

Et Tu, DX_SourceOutliner?

What, you might ask, does this have to do with the DX_SourceOutliner project? Well, when it was originally devised it had a pretty narrow focus and set of target features that went something like this (at a high level)…

- support all of the features of the Visual Studio Source Code Outliner Powertoy by Microsoft

- blend it with most of the filtering features of the CodeRush QuickNav tool

- limit the scope of the treeview to the currently-active code editor file

- use it as a learning experience to better familiarize myself with the DXCore Visual Studio extensibility API wrappers

- make it available to anyone else who wanted it free of charge (give back to the community)

- get something that worked well and fast first; making it extensible and a great project for my resume were very distant second and third goals

As with most people, my foresight is nowhere near as powerful as my hindsight. The list of ‘features’ above may have been the product of my foresight, but the suggestions of others, combined with my own 20-20 hindsight, have begun to show me that some of the design choices I made in the present DX_SourceOutliner are hampering my ability to maintain and extend it the way I want to in response to clever suggestions for new features from the community.

Challenges in Filtering the TreeView

Shortly after its release, I received feedback from several people evaluating the tool that they were somewhat surprised that toggling the FILTER control ON caused the treeview to be ‘collapsed’ or flattened in the window. Some have called this collapsed view the ‘listview’, but if you look closely you will see that it is still a treeview; it’s just one where all nodes are ‘leaf’ nodes (i.e., they contain no children) and are themselves rooted at the base of the tree structure.

This was a design decision that I made early on to address a very squirrely problem I faced in the design of the tool: how to react if a child, grandchild, great-grandchild, or other ‘child’ or ‘leaf node’ passed the filter condition but its parent, grandparent, great-grandparent, or other ‘parent’ or ‘higher node’ didn’t. As a simple example, since it’s possible to filter by ‘element type’ (e.g., show methods only), what would the tree look like if you toggled show-methods ON but show-classes OFF? Methods are contained in classes (at least in C# and VB.NET), so the treeview would be stuck trying to render the child ‘method’ nodes without their parent ‘class’ nodes in the tree.

How would that reasonably be expected to work? It gets worse when you consider that SOME classes might remain visible and others not, depending on the text entered into the filter pattern textbox. How would methods then be rendered? Should method nodes with visible parent class nodes appear under their parents while those with non-visible parents are somehow left ‘floating’ in the tree, parentless? Or rooted at the base level? Or collected under a new ‘orphans’ node…? Or….?

You can see how the complexity escalates when you start to consider all the parameters; some of these ideas are perhaps better than others, but all could be considered ‘valid’ ways to address this kind of challenge.
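To make two of the options above concrete, here is a minimal sketch in Python (an illustration, not the plug-in’s actual C#/DXCore code): keeping a non-matching parent whenever one of its descendants matches, and collecting matches whose parent was filtered out under a synthetic ‘orphans’ node. The `Node` class, its `kind`/`name` fields, and both function names are hypothetical, invented for this example.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class Node:
    """Hypothetical outline node: an element kind ('class', 'method', ...) and a name."""
    kind: str
    name: str
    children: List["Node"] = field(default_factory=list)


Predicate = Callable[[Node], bool]


def keep_ancestors(node: Node, matches: Predicate) -> Optional[Node]:
    """Option 1: keep a non-matching parent whenever any descendant matches,
    so matching children stay in their original place in the tree."""
    kept = [c for c in (keep_ancestors(ch, matches) for ch in node.children) if c is not None]
    if matches(node) or kept:
        return Node(node.kind, node.name, kept)
    return None


def collect_orphans(roots: List[Node], matches: Predicate) -> List[Node]:
    """Option 2: matching nodes stay under matching parents; surviving subtrees
    whose parent was filtered out are gathered under a synthetic 'orphans' node."""
    orphans: List[Node] = []

    def visit(node: Node) -> Optional[Node]:
        kept = [c for c in (visit(ch) for ch in node.children) if c is not None]
        if matches(node):
            return Node(node.kind, node.name, kept)
        orphans.extend(kept)  # parent is hidden, so its surviving subtrees lose their home
        return None

    visible = [n for n in (visit(r) for r in roots) if n is not None]
    if orphans:
        visible.append(Node("group", "(orphans)", orphans))
    return visible
```

With a methods-only filter over a `Customer` class containing a `Save` method and a `Name` property, `keep_ancestors` returns `Customer` with only `Save` beneath it, while `collect_orphans` returns a single `(orphans)` node holding `Save`. The first preserves structure at the cost of showing nodes that didn’t match; the second shows only matches but invents a node that doesn’t exist in the source file.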

As a way to ‘solve’ all these complex problems (or at least to make it so they didn’t need to be solved), I decided that the treeview would simply be collapsed into a flat, all-nodes-at-the-same-root-level hierarchy whenever filtering was enabled (yeah, I kicked the can down the road so I didn’t have to deal with it for now 🙂 ). But then, of course, I got the frequent comment, bug report, enhancement request, etc. asking me to somehow retain the treeview structure when the filter is activated. And so I couldn’t ignore it any longer (well, I could, of course, but only by being non-responsive to the needs of my user community, which is not a healthy choice for an OSS project).
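Part of the appeal of the flattening workaround is how little code it needs. A minimal Python sketch, assuming a hypothetical `Node` type like the one a tree outline would use (an illustration, not the tool’s code): walk the whole tree, keep each node that matches, and emit it as a childless root-level entry.

```python
from dataclasses import dataclass, field
from typing import Callable, Iterator, List


@dataclass
class Node:
    """Hypothetical outline node."""
    kind: str
    name: str
    children: List["Node"] = field(default_factory=list)


def walk(nodes: List[Node]) -> Iterator[Node]:
    """Depth-first, pre-order traversal of every node in the tree."""
    for node in nodes:
        yield node
        yield from walk(node.children)


def flatten_filter(roots: List[Node], matches: Callable[[Node], bool]) -> List[Node]:
    """Return every matching node as a childless, root-level copy,
    so the question of a hidden parent never comes up."""
    return [Node(n.kind, n.name) for n in walk(roots) if matches(n)]
```

Because every result is rooted at the base level, the hidden-parent question never arises; the price is that all structural context is discarded, which is exactly what users asked to have back.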

Reacting to Complex (simple?) Feature Requests

In software design, there are two extremes in approaching how you support future flexibility. On one end of the spectrum is the just-write-it-and-re-write-it-again-if-we-need-changes crowd. On the other end is the make-everything-configurable crowd. Since neither extreme makes sense as a hard-and-fast approach, a balance between the two is usually struck, where some things (those expected to change) are configurable (or at least isolated and extensible) and other things are simply fixed in place and difficult to change. Yes, in a perfect world all components of the software would remain uber-isolated so that anything could be extended without any impact on the rest of the source code, but all engineering (software engineering too) is at least partially about reasonable compromise of the ideal in the right places, and so this perfect world just doesn’t exist for most real-world projects.
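One common way to reach the ‘isolated and extensible’ middle ground is to put the part you expect to change behind a small interface. The sketch below is Python and purely illustrative: `FilterMode`, `Substring`, `Prefix`, `MODES`, and `render` are hypothetical names, and name-matching merely stands in for whatever behavior you expect to vary.

```python
from typing import Dict, List, Protocol


class FilterMode(Protocol):
    """Hypothetical extension point: turns all outline entries into the ones to display."""
    def apply(self, entries: List[str], pattern: str) -> List[str]: ...


class Substring:
    """Case-insensitive 'contains' match."""
    def apply(self, entries: List[str], pattern: str) -> List[str]:
        return [e for e in entries if pattern.lower() in e.lower()]


class Prefix:
    """Case-insensitive 'starts with' match."""
    def apply(self, entries: List[str], pattern: str) -> List[str]:
        return [e for e in entries if e.lower().startswith(pattern.lower())]


# Adding a mode means adding an entry here; render() never changes.
MODES: Dict[str, FilterMode] = {"substring": Substring(), "prefix": Prefix()}


def render(entries: List[str], pattern: str, mode: str) -> List[str]:
    """The 'fixed' part of the design: it knows only the FilterMode interface."""
    return MODES[mode].apply(entries, pattern)
```

Supporting a new mode means writing one more class and registering it; `render` and its callers stay untouched. This only pays off, of course, if you guessed correctly which part would vary.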

When you’re developing an OSS project, you usually lack official stake-holders who can help inform decisions about where you need to remain flexible and where it’s reasonable to assume long-term stability in your code. Sure, you can guess, but the lack of focus groups, clear business requirements, and even actual budget constraints leaves the how-much-is-enough decisions to be made in a (relative) information vacuum. As the author of the code you can certainly hope to guess right about such things, but in the end it’s still just a guess.

Subsequent to the initial release of the DX_SourceOutliner, I have received suggestions for several features (some since included, others not yet), such as these:

- permit the treeview to show content from all open document tabs in the code editor instead of just the active document

- permit the treeview to retain its structure when the filter is active

- permit the treeview to only show ‘leaf nodes’ (nodes with no children) when the filter is active

- allow the definition of ‘whitelisted’ file extensions to include and/or ‘blacklisted’ file extensions to ignore in the treeview (e.g., parse .cs and .vb files, but ignore .js, .aspx, etc. files)

- others
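The whitelist/blacklist request is a small, self-contained rule. Here is a minimal Python sketch of one possible interpretation; the function name and the precedence rules (an empty whitelist admits everything, and the blacklist always wins) are assumptions, not behavior taken from the tool.

```python
import os
from typing import Iterable, Optional


def should_outline(path: str,
                   whitelist: Optional[Iterable[str]] = None,
                   blacklist: Optional[Iterable[str]] = None) -> bool:
    """Decide whether a document is shown in the treeview, based on its extension.

    Assumed semantics: a missing or empty whitelist admits every extension,
    and the blacklist is checked afterwards, so it always wins.
    """
    ext = os.path.splitext(path)[1].lower()
    allowed = {e.lower() for e in (whitelist or [])}
    blocked = {e.lower() for e in (blacklist or [])}
    if allowed and ext not in allowed:
        return False
    return ext not in blocked
```

Extensions are compared case-insensitively, so `Module1.VB` is treated the same as `Module1.vb`.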

I didn’t foresee any of these requests when the initial application (v1.x) was under development, because they didn’t fit my own ‘world-view’ of how I imagined using the tool; I simply didn’t conceive of the use-cases that these additional features would be designed to support.

But when you discover that responding to new feature requests for your software system is a much higher-friction endeavor than it feels like it should be, it’s often time to stand back and reconsider whether your design is sufficiently extensible and adaptable in the proper places. If you are receiving feature requests that would require you to modify the very parts of the system that you expected to be unlikely to change, it’s an indication that the design may not support the right flexibility in the right places (i.e., that you guessed wrong about where the software needed flexibility).

The ‘Broken Window’ Syndrome: How Sloppy Begets More Sloppy

Because of the tight coupling of the DXCore runtime to Visual Studio, shortly after the onset of the development cycle for the DX_SourceOutliner it became clear to me that many of the usual techniques that I rely upon to develop software weren’t going to be entirely feasible in this project.

Test-Driven Development just wasn’t going to be all that efficient when executing your tests requires a separate debug instance of Visual Studio to attach to. Couple this with the fact that once a DXCore plugin is loaded into Visual Studio for debugging, the debug instance holds an exclusive lock on the assembly you’re testing, so you have to shut down the debug instance of Visual Studio completely in order to change your code and recompile. Even on the best hardware, this makes for a horribly inefficient code-test-debug-recode feedback cycle.
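The usual way around this kind of hosting problem is a seam: express the logic in terms of your own small domain types and an interface, and keep the host-specific code in a thin adapter behind that interface. The Python sketch below shows only the shape of the idea; `OutlineItem`, `ElementSource`, `methods_in_order`, and `FakeSource` are hypothetical, and whether DXCore’s types can be wrapped this cheaply is an assumption (the author describes that dependency as almost impossible to abstract away).

```python
from dataclasses import dataclass
from typing import List, Protocol


@dataclass(frozen=True)
class OutlineItem:
    """Hypothetical domain-model type: what the outliner cares about,
    with no reference to any host (Visual Studio / DXCore) object."""
    kind: str
    name: str
    line: int


class ElementSource(Protocol):
    """Seam between outliner logic and the host. A real implementation
    would adapt the host's parsed elements; tests supply a fake."""
    def elements(self) -> List[OutlineItem]: ...


def methods_in_order(source: ElementSource) -> List[str]:
    """Example outliner logic that depends only on the seam."""
    items = sorted(source.elements(), key=lambda i: i.line)
    return [i.name for i in items if i.kind == "method"]


class FakeSource:
    """In-memory stand-in: no debug instance of Visual Studio required."""
    def __init__(self, items: List[OutlineItem]) -> None:
        self._items = items

    def elements(self) -> List[OutlineItem]:
        return list(self._items)
```

A test can exercise `methods_in_order` against `FakeSource` in milliseconds; only the thin adapter wrapping the real host would still need a debug instance of Visual Studio.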

And then I got sloppy. Really sloppy. It’s funny how the loss of one disciplined technique (TDD) can lead you to discard most of the other disciplines in your practice. Faced with no TDD (and with DXCore’s almost-impossible-to-abstract-away dependency on the Visual Studio runtime that hosts it), I made some poor, lazy design choices, each one building on the previous…

- I didn’t ‘bother’ with developing the Tool Window form using a tried-and-true Model-View-Presenter (MVP) pattern; since I couldn’t test the Presenter in the absence of the View (the window running in VS), I reasoned, why bother to construct a Presenter in the first place?

- I didn’t ‘bother’ to create higher-order abstractions (a ‘Domain Model’) of the problem domain my software was meant to address; since I couldn’t eliminate the hard dependency on the DXCore-provided abstractions (like LanguageElement, CodeElement, Method, Class, whatever), I reasoned, why bother to abstract much of anything away from working ‘in the weeds’ with the ‘native’ low-level constructs?

- Since my problem was mostly procedural (i.e., ‘processing’ code elements into a treeview), why bother much with object-oriented approaches to solving the problem? Why not just code ‘procedurally’ in an OO language (C#)? Who cared, I reasoned, so long as the thing worked?
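For contrast with the first choice in the list above, here is a minimal Model-View-Presenter sketch in Python (a passive-view style variant; all names are hypothetical, and in the plug-in the real View would be the tool window hosted in Visual Studio). The point is that the Presenter depends only on an interface, so its behavior can be checked with a fake View and no running IDE.

```python
from typing import List, Protocol


class OutlineView(Protocol):
    """What the presenter needs from the tool window."""
    def show_items(self, names: List[str]) -> None: ...
    def filter_text(self) -> str: ...


class OutlinePresenter:
    """Holds the decision-making; the view only displays and reports input."""
    def __init__(self, view: OutlineView, names: List[str]) -> None:
        self._view = view
        self._names = names

    def on_filter_changed(self) -> None:
        text = self._view.filter_text().lower()
        self._view.show_items([n for n in self._names if text in n.lower()])


class FakeView:
    """Test double that records what the presenter asked it to display."""
    def __init__(self, text: str = "") -> None:
        self.text = text
        self.shown: List[str] = []

    def show_items(self, names: List[str]) -> None:
        self.shown = names

    def filter_text(self) -> str:
        return self.text
```

Only the real window’s implementations of `show_items` and `filter_text` would need Visual Studio to test; the filtering decision itself lives in `OutlinePresenter`.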

Dumb, dumb, dumb…and I kick myself for the (wrong) choices I made, but in fairness, I didn’t have the benefit of 20-20 hindsight. How could I know that all of these decisions were going to prove to be technical debt that would all too soon weigh so heavily on me that I would have to seriously consider re-architecting much of the existing tool’s structure just to make any of the changes needed for these new feature requests even remotely feasible?

But that’s the point: you NEVER have the benefit of 20-20 hindsight until it’s too late…which is why you NEVER cut these corners unless you know what you’re doing to yourself…and even then you think once, twice, three, and probably even four times before you do such things. Or at least you should (mea culpa).

Technical Debt, meet Technical Bankruptcy

So now I’m ready to declare ‘technical bankruptcy’ (my term). Extending the metaphor of ‘technical debt’, this is what you get when you decide that the only effective way to deal with the debt is to declare bankruptcy, disassemble the existing project, and ‘sell off’ (extract for re-use) the individual parts that still have some value (much as a bankruptcy court does with a too-far-gone business).

More on how I’m planning to accomplish this, and on the architectural changes that are afoot, next time. Stay tuned!

Hi Stephen

As always, a fascinating insight into how a developer’s mind works. I find that one of the most difficult things to do is to declare technical bankruptcy as you describe, usually because the amount of time and energy that has gone into the project clouds our judgement.

Regards

Nathan
