Well, the event evaluation forms for last weekend’s NYC CodeCamp are IN and have been tallied. And the winner is…

Just kidding. There is no winner because this year we decided to do a few things differently than in years past:

- Eval forms didn’t contain numeric scoring for pre-defined categories (e.g., “Speaker was knowledgeable about their topic”, “Speaker was Professional”, “Session met my expectations”, etc.)

- Eval forms weren’t anonymous

- Completion of the Eval Form was a pre-requisite for each attendee’s participation in the raffle for the SWAG being given away in the Closing Session

The decision to eliminate numeric rankings was driven by several motivators:

- numbers (by themselves) don’t represent information that a speaker can use to improve their presentation content and/or their presentation skills (e.g., if you get a ‘1’ instead of a ‘10’, that might tell you that you suck, but it’s not helpful at all in telling you how to improve for the next time)

- Even if you provide ‘comment space’ in addition to numeric ranking scales for attendees to complete, only a VERY few will ever bother to provide comments since MOST will consider that the ‘number’ speaks for them. By eliminating the numeric scale entirely, the ONLY way for attendees to provide feedback was to write SOMETHING in the comment space, ensuring MOST would indeed write comments

Since forms didn’t contain numeric rankings of pre-canned questions, there is no way to declare a ‘winner’ (most-highly-rated session, etc.). Which is probably a good thing because IMO there’s no point in turning “best-speaker” into a competition and we had no ‘prize’ to give away for that anyway 🙂

The Process

Rather than individual forms being used for each session, each attendee was given a single form (the relevant portions of which are reproduced below) and asked to keep it with them as they moved from session to session throughout the day.

Attendees would fill out the relevant info as they attended each of their desired sessions and return the completed form to the organizers right before the SWAG raffle at the Closing Session.

There was some question about how well this would work before we did it. Some of the concerns were:

- Would attendees be comfortable completing a non-anonymous Eval Form that had their name + email address on it?

- Would attendees take the time to write anything useful in the comments section since it takes so much more time to do that than simply circling a number from 1-10 in response to predefined questions?

Gratifyingly, the answer to both of these seems to have proven to be a resounding YES.

It Wasn’t Perfect, but it DID Work

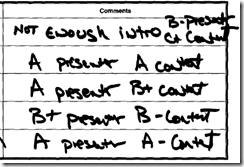

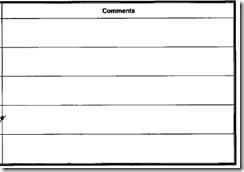

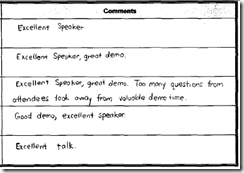

As you can see from some of these less-than-detailed comments (sessions cropped in the interests of protecting the speakers), it didn’t work perfectly in every case…

…but for the most part, the comments were both detailed and helpful for the speakers in re: the impressions of their sessions. And yes, that last example where every comment was blank is from an actual submitted form – fortunately it was one-of-a-kind and all others were completed to a surprisingly high degree of detail. If you’re the one who submitted the ‘blank’ form, shame on you for cheating the speakers out of valuable feedback from you!

Here’s an excerpt from just one of the comments received on one of the day’s sessions:

Jumped around quite a bit. Would be more effective if built a much simpler example and built it line-by-line by typing the code as going (forced to slow down). Goal is to relay basic concepts and concept down to code. Too fast to the point that large part of the audience seemed not to follow. Speaker knowledge obvious – presentation weak.

Now, whether that’s a widely-felt opinion about the session or not has to be inferred by comparing it against all the other attendee comments received about that session and considering them in their totality, of course. But that level of specific, actionable feedback to the speaker is just not something you’re going to get if attendees are limited to a 1-10 scale! 🙂

Other Interesting Excerpts

There were some other real gems in the comments too, of course. Some of my favorites (again, specific session elided to protect the speakers)…

Very good speaker. Humorous. Presentation could have used more attractive examples. Exaggerates a bit.

Not sure what was unattractive about the examples, but I’ll just leave it at that!

Wow. Nothing else can be said. Seriously, wow.

Sorry I missed that session!

Sucked. Left after 10 min.

Apparently glad I missed that one!

Great and informative session. Info was immediately useful in day-to-day work.

Probably one of the most complimentary things you could say about a session. Immediately useful value! WIN!

Lots of code; in depth – may not be appropriate for absolute beginner (me) in the topic. Speaker is passionate and tries to convey as much info as possible.

That’s the kind of feedback that a speaker can act on: “If I was targeting noobs in the topic, I may have lost some people at one point. I should evaluate whether I’m starting with simple enough basics or not…”

Great content – well focused, deft handling of questions. Sith Lords = Great Presentation.

Love the reference to the Sith Lords in that one!

Good info. Presenter needs to tailor slide deck such that code examples are reached more quickly. Too many questions generated by theory. When running low on time he should be willing to hold questions.

Again, specific feedback providing actionable intelligence to the speaker. What went wrong, suggestions on how to perhaps deal with it in the future. This kind of feedback is invaluable to a CodeCamp (or any other!) speaker when trying to improve their delivery!

Funny and entertaining. Would have preferred covered more data and less styling.

Sounds like we have a budding comedian on our hands here… 🙂

Amazing. He knows what he is talking about.

Not 100% sure about what about that is amazing… 🙂

Boring subject, even worse presenter (not engaging whatsoever)

Ouch. Sounds like a mis-match between expectations and delivery in there somewhere 🙁 More work to do!

Content is good. The delivery is too detailed. If using a simpler example, it would be better.

Again, specific detailed commentary that a speaker can actually use to improve their delivery! Great stuff!

Patterns Observed in the Evaluation Feedback

When reviewing the evaluation feedback in its entirety, there are a few things that come to light as repeating patterns in the data…

- some sessions have near-consistent feedback re: ‘generally positive’ or ‘generally negative’

- some sessions have wildly-varying feedback re: ‘generally positive’ or ‘generally negative’

Consistent POSITIVE or NEGATIVE Feedback

For those with near-consistent positive or negative feedback across many attendees’ comments, I think it’s reasonable to take that on its face: each such session in question probably was generally either ‘good’ or ‘bad’ and the speaker should either be satisfied with their session or should probably consider rethinking their content, their delivery, or perhaps both depending on the comments.

This is where having detailed comments really shines in re: value for the speaker: if a speaker really wants to improve their presentations for the future, then they should have little doubt about where to focus their energies based on the detailed comments from attendees.

Wildly-Varying Feedback

But another troubling theme that I think we have to acknowledge in the data is that of sessions where the feedback tended to vary wildly among attendees. By way of illustration, here are four comments from four different attendees all about the same session…

Interesting presentation, very knowledgeable presenter.

I did not like the way the material was presented.

Not useful. Boring.

This presentation was AWESOME. This is the kind of presentation that I will be reviewing as soon as I get home. It was chock full of deep/rich content. The presenter was smart, cool, and funny! Wish this was taped!

This wide disparity in feedback points to one probable underlying cause: a mismatch between some attendees’ expectations for the session and the session’s actual content/delivery by the speaker.

Too Advanced? Too Beginner?

The preceding four comments are all from one of our more advanced sessions/topics of the day, but a similar pattern in disparity of feedback was observed with the comments from some of our more ‘beginner’ or ‘entry-level-introduction’ sessions too. The common thread in the feedback from such sessions strongly suggests that the disconnect results from session abstracts giving insufficient indication of their expected ‘level of difficulty’ (or expected prerequisite knowledge) for attendees.

This challenge is a recurring theme for CodeCamp events in that some people will attend session XYZ who already know the basics and are searching for more advanced info, tips and tricks, etc. while others will attend session XYZ with the mindset “I’ve always heard about this topic but know nothing – let me check it out!”

There’s no ‘silver bullet’ for this problem, but there are some concrete changes that we can make for future CodeCamp events to try to reduce this mismatch so that attendees don’t end up in sessions that are either too boring or too overwhelming for them:

- Ask speakers to indicate 100, 200, 300 level for their talks (even though this is clearly subjective too, it would at least provide GUIDANCE to attendees about expected familiarity with the topic)

- Ask speakers to list out expected prerequisite knowledge for an attendee to get ‘value’ out of their session

I routinely do the second of these on all the session abstracts I submit anywhere I am planning to speak, and even though it sometimes makes event organizers ask “Would you mind trimming your abstract down to just a paragraph so we can post it now that it’s selected?”, I still feel it’s valuable to be able to define my own expectations for the skills my audience should have to get value out of a session I am delivering.

By way of an illustration, here is the relevant section from the abstract for the talk I just delivered at the NYC CodeCamp myself:

Target Audience: .NET developers interested in learning how what sometimes seem to be abstract OO design principles can actually be applied to improve the structure and flexibility of their own code. The ideal attendee will have several years of .NET (or other OO language) development experience on at least moderately complex systems so that they can recognize the pitfalls of OO design that the S.O.L.I.D. principles are attempting to address.

And here it is again for my upcoming talk at the Philadelphia CodeCamp in April:

The ideal attendee will have several years of object-oriented software development experience on the .NET platform. Familiarity with Interfaces, Classes, inheritance, and polymorphism is assumed. Exposure to common .NET 3.5 constructs such as lambda expressions, anonymous types and methods, and extension methods is required. Attendees should also have had some prior exposure to the mechanics of writing unit tests, although not necessarily in a Test-First/Test-Driven context. Familiarity with IoC containers and dependency injection techniques on the part of the attendee is helpful but not required.

I think that if we are able to elicit this information from speakers in addition to simply a description of the content of their session, then it might be possible to mitigate some of the impedance mismatch that’s never entirely avoidable between sessions and attendees.

Let’s call that ‘Lesson-Learned’ and file it away for next time.

Honorable Mention

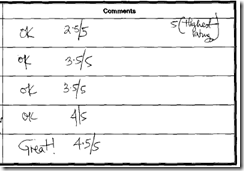

A couple of the evaluation forms deserve ‘Honorable Mention’ for a variety of interesting reasons.

Here’s one where the author’s handwriting looks suspiciously like MS Comic Sans and it stood out well in a stack of forms with terrible handwriting! Kudos to this person for the high-legibility of their handwriting – it would have taken me perhaps 2+ hours to write that so clearly!

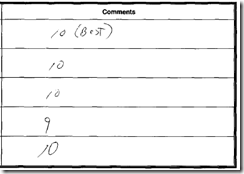

Here’s a fun one where the author wanted to ensure that we understood their rating scale when tallying their results (10 –> best!)

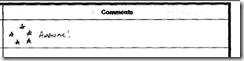

And lastly, here’s one that caught my eye as not only receiving ‘5-Stars’ but apparently earning them in a special pattern too!

General Comments

We also got a considerable number of ‘general comments’ on the overall event. Happily they were universally positive. Most of them contained comments like ‘well-organized’, ‘great event’, and ‘thanks to the organizers and the sponsors’ but in addition to those a couple caught my eye for whatever reason:

CodeCamp made my day!

…and…

Overall it was a fun and informative day. I’m looking forward to VS2010 now more than ever! I’ll definitely be back next year and I’ll recommend CodeCamp to my friends and co-workers.

Feedback like that makes all the hard work worthwhile, so to all those attendees who took the time to write detailed, informative, actionable comments on their forms I can 1000x assure you that every comment was read, evaluated, and internalized by both organizers and speakers alike!

Thanks so much for taking the time to offer thoughtful, useful input to everyone involved!

(except for you of course, blank-eval-form-submitter-guy/gal!)

Great post…

I was very gratified with the responses. I’ve read some random comments (did you see that person who wrote an entire page of general comments?). I’m going to read all of them this week.

I’m really thinking that eliminating ratings makes for MUCH more useful feedback, and based on this, we’re definitely changing how we do things at Fairfield / Westchester. It should not be a competition — these events should just be a great learning opportunity for everyone — speakers included. Competitions are great for “Speaker Idols” (a terrific event in its own right), but if we can get feedback like this, it’ll just help everyone improve.

Just to clarify a bit: the attendees were not informed that the evaluations would ultimately be anonymous when we sent them to the speakers. Despite that, we had what appeared to be really honest evaluations. I wonder how people would respond if they knew they would end up being anonymous (I stripped out the names and email addresses before sending them to the speakers; we just used those for the raffles). Hopefully, they’d remain constructively critical. That would be an interesting experiment for next year, if we do mention this in the opening session.

I agree 1000% (yes, 10x more than what you say 😉 ) that we should ask speakers for the level and prerequisites next time. Your own abstract was great, and should be used as a model for others. As you mentioned, it does help to have an abbreviated version for posting on the wall, though.

Now that I’ve had more than a week to absorb the event, I’m about to work on a blog post about the code camp. Of course, the way I write and edit (and re-edit), it may take another week before I post it 😉
